Untangling the PostgreSQL upgrade

PostgreSQL 9.6 has just been released and most of the postgres users will start asking themselves how to upgrade to the new major version. This post has the intention of showing different procedures for upgrading your PostgreSQL server.

Upgrading to a new major version is a task which has a high ratio of preparation over total execution time. Specifically when skipping a release in the middle, for example, when you jump from version 9.3 to version 9.5.

Point releases

On the other hand, point release upgrades don’t need as much preparation. Generally, the only requirement is for the postgres service to be restarted. There are no changes to the underlying data structure, so there’s no need to dump and restore. In the worst case scenario you may need to recreate some of your indexes after you’ve finished upgrading the point release.

It’s very wise to always stay on the latest point release, so you don’t stumble over a known (and likely fixed) bug. This means that once the latest version is out, schedule time for the upgrade as soon as possible.

Major release upgrade

When doing complex tasks like this one, it’s good to consider all possible options to accomplish the final result.

For major release upgrades, there are three possible paths you can take:

- Upgrade restoring from a logical dump

- Physical upgrade

- On-Line upgrade

Let me explain each one in detail:

1) Upgrade restoring from a logical dump

This is the simplest of all possible ways to upgrade your cluster’s data structure.

To make it short, the process here requires a logical dump using pg_dump from the old version, and pg_restore on a clean cluster created with the newly installed version.

Key points in favor of using this path are:

- It’s the most tested

- Compatibility goes back to 7.0 versions so you could eventually upgrade from 7.x to one of the recent releases

Reasons why you should avoid using this option:

- The total downtime on large databases can be a problem, as you have to stop write connections before you start running pg_dump;

- If there are many large objects in the database, pg_dump will be slow. Even when doing it in parallel. Restore will be even slower than pg_dump when lots of large objects are stored in the database;

- It requires double disk space, or the removal of the old cluster before restoring;

On servers with several CPU cores, it’s possible to run pg_dump in parallel using the directory format. Once it has finished, restore in parallel as well, using the -j switch in both pg_dump and pg_restore. But you can’t start the restore process until the whole dump has finished.

2) Physical upgrade

These kind of upgrades have been available since version 9.0 to perform in-place upgrades from versions as old as 8.3. They are called “in-place” because they’re done over the same server, and preferably on the same data directory.

Advantages of these kind of upgrades:

- You don’t need space for another copy of the cluster.

- Downtime is much lower compared to using pg_dump.

There are quite a few disadvantages:

- Once you start the new version of PostgreSQL there’s no going back to the old version (cluster will work only with the new version from there on).

- Stats are zeroed after the upgrade, so right after starting the new version of PostgreSQL a cluster wide analyze has to be executed.

- There have been many bugs found over the past years regarding pg_upgrade, which makes some database administrators reluctant of using this method for upgrading.

- Some people have had problems when skipping a major release, for example going from version 9.2 to version 9.4.

- With large catalogs it will perform poorly (clusters with many databases, or databases with many thousands of objects).

You can also run pg_upgrade without the –link option (in this case pg_upgrade will generate a second copy on disk of your cluster) so you can go back to the old version. But you’ll lose both of the advantages listed above.

3) On-Line upgrade

The procedure to follow for this method would be like this:

- Install both versions so you can have them working in parallel. This can be done on the same server, or using two physical servers.

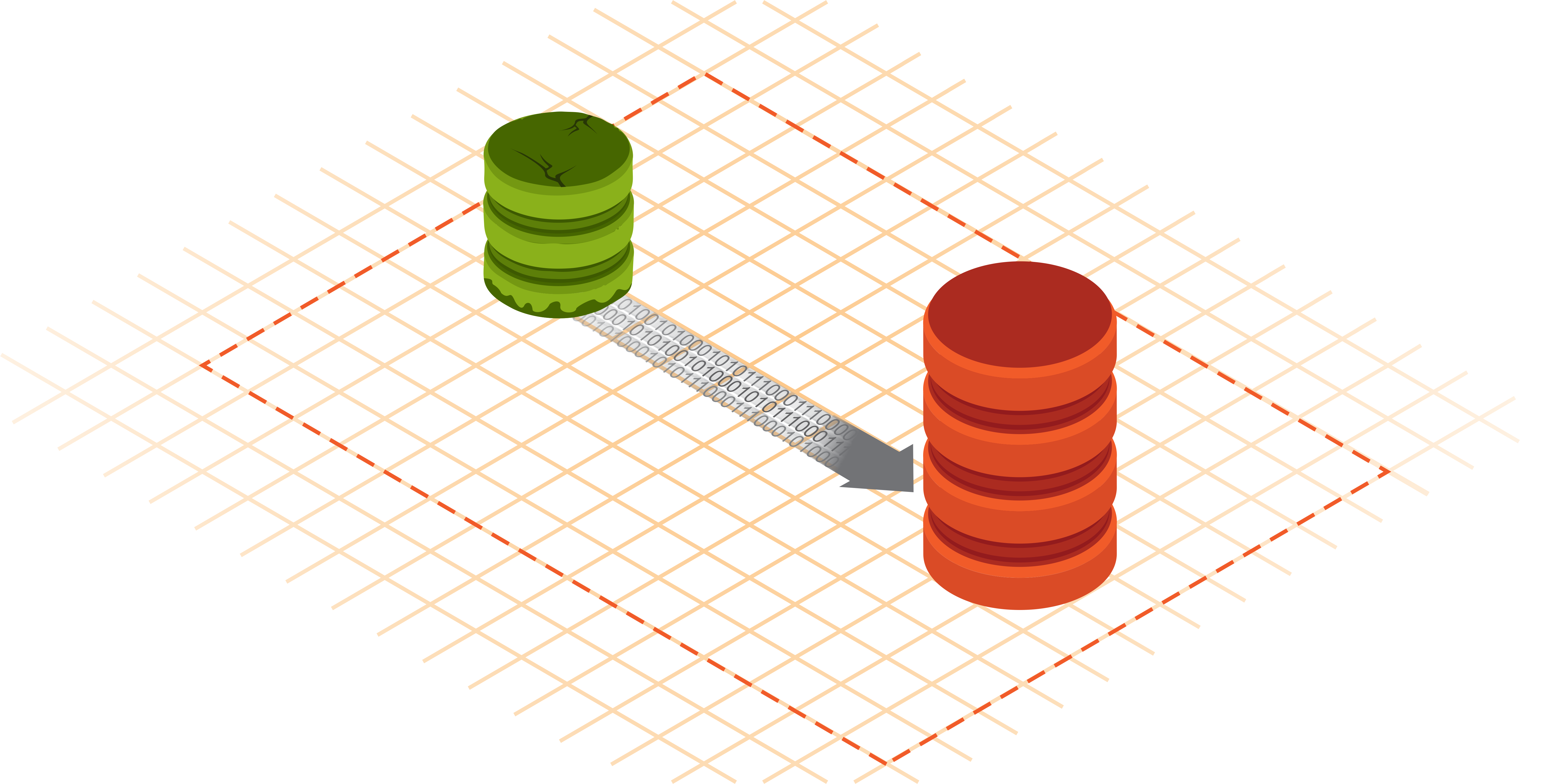

- Create an initial copy, and replicate the changes from the moment you started the copy on the source node (this would be similar to an initial pg_dump).

- Keep replicating logically the changes until the lag is close to zero.

- Repoint the connection info from the application server to connect to the new server.

This type of upgrade has been available since the first trigger based replication solutions were around. In other words, since Slony-I was around.

Trigger based replication solutions don’t care which version you have on one side or the other, as it copies the changes using supported SQL commands.

The trigger based replication tools recommended, in the order they appear are:

- skytools: PgQ + londiste

- Slony-I

This should be the preferred way for upgrading. Let’s see the advantages of using this method:

- Zero downtime!

- Great for upgrading to newer hardware as well.

- On-line testing of the cluster with the new version (read-only queries, or you might end up with conflicts).

- Reduces drastically all table and index bloats.

There are some disadvantages:

- It needs double storage space, as it has to store a second copy of your data.

- As it’s based on triggers, the system has to write each change twice, which means that there will me more disk I/O on the source server (old version of the cluster).

- All tables have to have a primary key, so the replication tool can individualize the tuples that are updated or deleted

- It doesn’t replicate catalog tables, so it won’t replicate large objects.

- Basic use doesn’t cover schema changes. It can be done, but not transparently.

A new frontier with pglogical

Since PostgreSQL 9.4 we have logical decoding of the transaction logs. This means that now we can decode the transactions from the WAL and manipulate the output.

A whole new world of possibilities appeared in the replication field. Triggers are no longer necessary to accomplish logical replication!

This basically means you don’t need to save a separate copy of all the inserts, updates and deletes using triggers any more. You can just get those data manipulation operations from the transaction logs, by decoding it. Then, it’s the tool you’re using that has to manipulate the output and send it over to the subscribed downstream node. In this case that tool is pglogical.

So, what does this all mean?

Well, if you are on a version 9.4 or 9.5 of PostgreSQL and you want to upgrade to 9.6, you can perform an online upgrade like the one detailed above, but using pglogical instead of one of the trigger based replication solutions.

I won’t go further into details as there are other blogs on this particular topic, like this one written by Petr Jelinek: PGLogical 1.1 packages for PostgreSQL 9.6beta1

Conclusions

Depending on the schema of your database, the data size, possible down time window, version of the current PostgreSQL installation you might choose one option over another or whatever fits best.

- If your database is small and there’s a suitable maintenance window you can use, you could opt for running a pg_dump and pg_restore. Depending on the size of the data set there’s a point where the option isn’t feasible anymore.

- If you have a large database (several hundreds of GBs, or even TBs of data) you’ll have to look for other options like an in-place upgrade with pg_upgrade or an online upgrade.

- If upgrading from version 8.3 or higher to any 9.x version you may use pg_upgrade.

- For versions older than 8.3, you’ll have to opt for one of the logical replication solutions like londiste or slony.

- If on a 9.4 or 9.5 database you are better off using pglogical.

Always remember that for logical replication the tables have to have a Primary Key.

With pglogical that rule isn’t so strict: You can replicate insert-only tables. But for update and deletes, it’s still mandatory to have a Primary Key or a REPLICA IDENTITY on the table.

If you’re in need of help for upgrading your PostgreSQL database, you can check the

2ndQuadrant Upgrade services.

Nitpicking: “you’ll loose” should be “you’ll lose”

Thanks, and fixed.

> Some people have had problems when skipping a major release, for example going from version 9.2 to version 9.4.

What problem?

If you are asking about those particular versions (9.2 → 9.4), that was just to give an example of what I meant with skipping a release. (maybe I should have made that a bit clearer in the post)

I personally never bumped into issues like this, even while skipping 2 releases (we did a 9.0 → 9.3 upgrade with –link). There has been people coming up on IRC or pgsql-general with issues after a pg_upgrade that jumped a version.

One of them was regarding hstore extension and a user doing an upgrade that skipped the version where the => operator was deprecated.

https://www.postgresql.org/message-id/4b5993299ed948728e2ad6e278861807%40NAIAD.omni.imsweb.com

So in the end, it mostly depends on the schema of the database, extensions you use, etc.

What method did you use for the 9.0 to 9.3 upgrade?

I did a 9.0 → 9.3 upgrade some years ago and the customer asked for a pg_upgrade.

It went smooth, even though it was my first production upgrade with pg_upgrade 🙂

Physical upgrade :- You don’t need space for another copy of the cluster.

Can we have some example here

Hi,

Those upgrades are the ones performed with pg_upgrade using link mode (check the –link option of pg_upgrade)

The advantage here is that the new version of the data directory is created using hard links which is very fast, and after that pg_upgrade does it’s magic. But as the new data directory is actually linked to the old one it will modify the already existing files.

That’s why there’s no going back once you start the new server.